This is the story of how we assess risk and reduce it to tolerable levels for Jenkins upgrades.

My team is responsible for keeping 32 Jenkins controllers up-to-date and supporting the builds they run. Widespread breakages are impactful for users and can completely exhaust the team’s capacity. Further, Jenkins is stateful and difficult to roll back. All of this creates a strong incentive to minimize impact with research and prevention.

This process has been evolving for years. It worked particularly well with Jenkins 2.332.1. Let’s dive in.

Risk Discovery

The Jenkins community does an excellent job crafting release notes. Most points in this release sound safe, but two grab my attention:

- Upgrade the Guava library from 11.0.1 to 31.0.1.

- Remove support for plugins written in JRuby or Jython.

These are big changes. Let’s take a moment to talk about why.

Guava

Guava before 21.0 used an aggressive deprecation-and-removal policy.

As an example, Objects.firstNonNull(T first, T second) was removed from guava 21.0.

This method had been in place since guava 3.0.

Any library that was compiled against guava 3.0 through guava 20.0 could have used this method.

If guava 21.0 or later is on the classpath of our running application, any call to that method fails at runtime with a NoSuchMethodError, even though the library compiled cleanly.

Years ago guava was inadvertently upgraded in our internal platform from 19.0 to 21.0, causing widespread breakage. The response was to pin it back to 19.0, where it still remains today. I’m worried when I see guava is jumping 20 versions at once. Very worried.
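One rough way to look for this kind of exposure ahead of time is to search compiled classes for references to a removed method. The sketch below is an illustration only, not part of our tooling. It assumes a plugin archive is a zip file that may bundle dependency jars, and that the class and method names appear as plain ASCII in each class file's constant pool. The function names are hypothetical. Because it matches raw bytes rather than parsing bytecode, it can report false positives, for example a class that uses Objects for something else and gets firstNonNull from a different class.

```python
import io
import zipfile

# Assumption: these identifiers are stored as plain ASCII strings in the
# constant pool of any class file that references them.
CLASS_REF = b"com/google/common/base/Objects"
METHOD_REF = b"firstNonNull"


def find_suspect_classes(archive_bytes, prefix=""):
    """Return paths of .class entries that mention both byte strings.

    Recurses into nested .jar entries, since a plugin archive can bundle
    its dependencies as jars. This is a byte-level heuristic, not a
    bytecode parser, so treat every hit as a lead to verify by hand.
    """
    hits = []
    with zipfile.ZipFile(io.BytesIO(archive_bytes)) as archive:
        for name in archive.namelist():
            data = archive.read(name)
            path = prefix + name
            if name.endswith(".class"):
                if CLASS_REF in data and METHOD_REF in data:
                    hits.append(path)
            elif name.endswith(".jar"):
                # Nested jar: scan it and keep the outer path for context.
                hits.extend(find_suspect_classes(data, prefix=path + "!/"))
    return hits


def scan_plugin(path):
    """Scan one plugin archive on disk (hypothetical helper)."""
    with open(path, "rb") as handle:
        return find_suspect_classes(handle.read())
```

Calling scan_plugin on a plugin archive returns a list of class paths worth a closer look. An empty list does not prove the plugin is safe, since other removed methods would need their own search terms.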

JRuby/Jython Plugins

The Jenkins community wrote an excellent blog post about removing ruby-runtime. Risk assessment is easy here, because the post includes a list of impacted plugins. We automatically consider these plugins highest risk and will work to remove them before this upgrade.

Ranking Plugins by Risk

We have a list of high-risk plugins already by way of the blog post. Guava is a different case though. It’s been available to every plugin for more than a decade, so it could be used anywhere.

The Jenkins release notes also include this reassuring statement:

Plugins have already been prepared to support the new version of Guava.

This is great news for plugins that continue to be distributed. Unsupported plugins, such as ruby-runtime, will obviously not be updated. We must have a way to categorize our plugins.

Buckets

I think of plugins as fitting into three buckets.

Managed plugins are plugins we automatically upgrade on every rollout. Anything managed is not a concern for guava, so we can safely ignore everything in this bucket.

Unmanaged plugins result from users installing plugins we don’t automatically upgrade. These end up out-of-date, so there could be issues with guava. The remedy is to upgrade through the plugin manager. Unmanaged plugins present some risk, but we can expect fast remediation. We won’t spend much time here.

Deprecated and suspended plugins are the highest risk. Upgrading only to find out a deprecated, popular plugin is broken is a bad situation. One option is to do an emergency migration of every job using the plugin. Another option is to fork the abandoned plugin, fix an unfamiliar codebase, publish a release internally, and do a subsequent upgrade. I have felt the stress of both options; neither is good.

Now that we know which kinds of plugins are the highest-risk, we have to identify them.

Plugin Categorization

Plugins we build in-house are built continuously, so we don’t need to worry about runtime guava issues. We know from the release notes that plugins still listed on the Jenkins update center are ready for guava. Everything left over is risky.

Leftover plugins have been abandoned, and we cannot count on them working after the guava upgrade. We add these plugins to our high-risk list and begin working to reduce that risk.
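The sorting described above comes down to a few set lookups. Here is a minimal sketch, assuming we already have three sets of plugin IDs: everything installed, the plugins built in-house, and the plugins still listed on the update center. How those sets are gathered is left out. The function name and the example ID acme-internal-tools are hypothetical.

```python
def categorize(installed, in_house, update_center):
    """Sort installed plugin IDs into three groups.

    in_house:      built continuously by us, so not a runtime guava concern
    update_center: still distributed, so expected to be ready for guava
    high_risk:     everything left over
    """
    groups = {"in_house": set(), "update_center": set(), "high_risk": set()}
    for plugin in installed:
        if plugin in in_house:
            groups["in_house"].add(plugin)
        elif plugin in update_center:
            groups["update_center"].add(plugin)
        else:
            # Neither built by us nor still listed: treat as abandoned.
            groups["high_risk"].add(plugin)
    return groups


# Illustrative input only; acme-internal-tools is a made-up plugin ID.
example = categorize(
    installed={"git", "ruby-runtime", "acme-internal-tools"},
    in_house={"acme-internal-tools"},
    update_center={"git"},
)
```

With this input, ruby-runtime is the only plugin that lands in the high_risk group.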

Reducing Risk

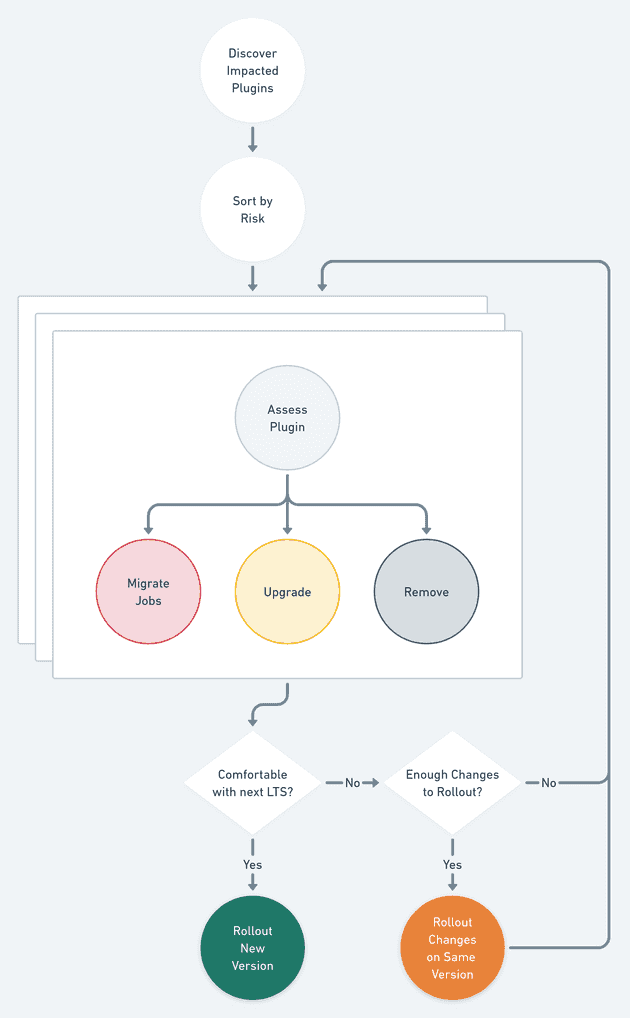

Now that we have our list of high-risk plugins, we can start to mitigate. Basically we are going to:

- Discover the impact of each risky plugin

- Use our judgment to choose a path forward

- Rollout the current version with changes when enough changes are ready

- Upgrade once we feel comfortable with the risk remaining

Visually, it looks like this:

Usage Signal

To migrate or remove plugins efficiently, we must know which jobs are using them. The Jenkins plugin-usage plugin does a great job of surfacing where many plugins are used, though it has some blind spots and does not catch every usage.

In February 2021 I added API support to plugin-usage. We lean on this heavily to assess the impact of plugins. A simple wrapper script calls the API on each Jenkins controller, finds the plugin, and outputs known usage as a CSV.

$ ./plugin-usage grails

$ head -n 4 grails-usage.csv

controller,last_completed_at,job_url

argon,2022-05-02T12:51:26-07:00,path-to-argon/job/publish-candidate

boron,2022-01-02T08:03:13-07:00,path-to-boron/job/E2E-test-suite

carbon,(never),path-to-carbon/job/run-contract-tests-cloned

This CSV has the necessary context to prioritize further. Additional discovery is easy, because the link to the job is included.

This part of the process is an art. Some plugins report no usage at all and are easy to remove. Others may be used by 1,000 jobs that last ran today, making removal non-trivial. Or there might be 100 usages, but only four jobs that ran since 2017.

In a big enough system, you never know what you’ll find.
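To make the prioritization concrete, here is a small sketch that summarizes a usage CSV in the format shown above: how many usages exist in total, and which jobs have completed a run since a chosen cutoff. It assumes the three columns from the sample, ISO 8601 timestamps with a UTC offset, and the literal value (never) for jobs that have not completed a run. The function name and the cutoff date are illustrative, not part of the wrapper script.

```python
import csv
import io
from datetime import datetime, timezone

# Sample rows copied from the CSV excerpt above.
SAMPLE = """controller,last_completed_at,job_url
argon,2022-05-02T12:51:26-07:00,path-to-argon/job/publish-candidate
boron,2022-01-02T08:03:13-07:00,path-to-boron/job/E2E-test-suite
carbon,(never),path-to-carbon/job/run-contract-tests-cloned
"""


def summarize_usage(csv_text, cutoff):
    """Return (total usages, job URLs with a completed run at or after cutoff).

    cutoff must be timezone-aware so it can be compared against the
    offsets in the last_completed_at column.
    """
    total = 0
    recent = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        total += 1
        stamp = row["last_completed_at"]
        if stamp == "(never)":
            continue  # the job has never completed a run
        if datetime.fromisoformat(stamp) >= cutoff:
            recent.append(row["job_url"])
    return total, recent


# Arbitrary cutoff chosen for illustration.
total, recent = summarize_usage(SAMPLE, datetime(2022, 3, 1, tzinfo=timezone.utc))
```

For the sample rows, this reports three usages, with only the argon job having run since the cutoff. A large gap between the two numbers is a hint that most usages are stale.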

Intermediate Rollouts

We do plugin removals and unmanaged plugin upgrades by rolling out the already-deployed Jenkins version again. Our server deployment is responsible for uninstalling plugins. Unmanaged upgrades are queued up ad hoc, but require a restart to take effect.

This is another art form. From start to finish we removed 16 plugins and upgraded 60 unmanaged plugins. Our tooling meaningfully reduces risk, but it cannot guarantee we will catch everything. Unintended consequences from upgrades and removals mean scrambling to remediate. Intermediate rollouts allow us to limit the number of changes we roll out together.

We did three intermediate rollouts of Jenkins 2.319.3 before upgrading to 2.332.3.

Upgrade

With all the highest-risk plugins addressed, we proceeded to upgrade. As of May 18, Jenkins 2.332.3 is running on all our controllers. There was only one missed plugin, log-parse, and it already had a fix available. Within an hour it was upgraded, or queued for upgrade, on all impacted controllers.

Conclusion

Jenkins is a much different system than the other services we operate. It is stateful, challenging to roll back, and has been effectively pulling together community contributions for a generation. The community does a wonderful job of being clear about what is not supported, and supporting well what is supported. As a result we have evolved our rollouts to meet the challenges it presents. I’m happy with the tradeoffs we have chosen and the progress we continue to make.